A/B testing is a powerful tool for evaluating product improvements. It lets you reliably estimate the effect of even small, atomic changes and decide whether to roll them out to all users. A/B tests also give you objective information about user preferences.

What is A/B Testing?

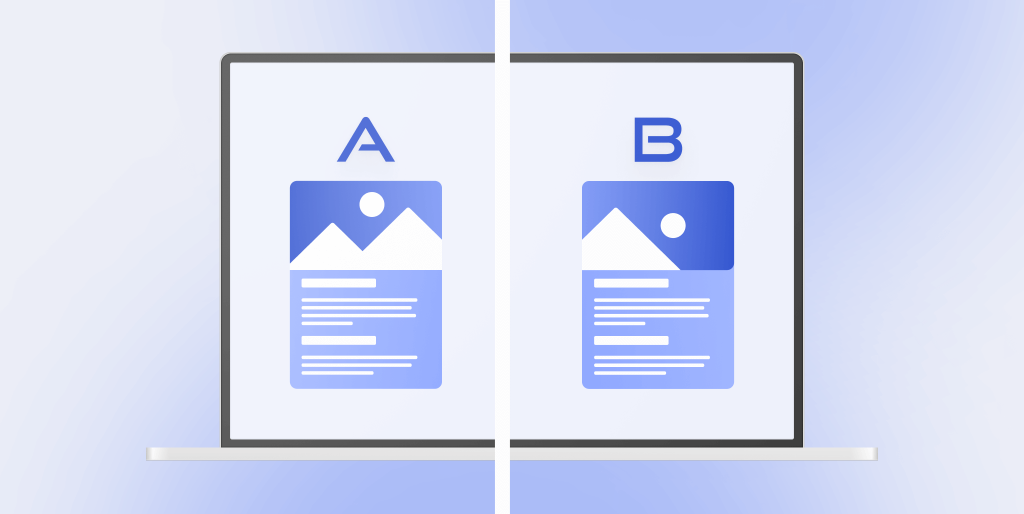

A/B testing (also called split testing) lets you compare the quantitative performance of two versions of a web page. It also measures the effectiveness of page changes, such as new design elements or calls to action. The practical goal is to find and implement the page components that improve its performance. A/B testing is an applied marketing technique that can raise conversions, drive sales, and increase the profitability of a web project.

Split testing begins by evaluating the metrics of an existing web page (version A, the control page) and looking for ways to improve it. For example, suppose you run an online store whose landing page has a 2% conversion rate. The marketer wants to raise this figure to 4%, so they plan changes intended to achieve that.
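To make the goal concrete: a conversion rate is simply conversions divided by visitors. Going from 2% to 4% is a gain of 2 percentage points, or a 100% relative increase. The sketch below uses hypothetical counts (200 orders from 10,000 visitors). The function names are illustrative only and are not part of any testing tool.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who converted, as a fraction (0.02 means 2%)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def change_in_rate(baseline: float, target: float) -> tuple[float, float]:
    """Return (absolute change in percentage points, relative change in percent)."""
    absolute_pp = (target - baseline) * 100
    relative_pct = (target - baseline) / baseline * 100
    return absolute_pp, relative_pct


# Hypothetical landing page: 200 orders from 10,000 visitors.
baseline = conversion_rate(200, 10_000)
absolute_pp, relative_pct = change_in_rate(baseline, 0.04)
print(f"baseline {baseline:.1%}, goal +{absolute_pp:.1f} pp ({relative_pct:.0f}% relative)")
```

Keeping both numbers in view helps: a "100% increase" sounds dramatic, but here it means two more orders per hundred visitors.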

How A/B Testing Works

Let’s start with a simple scheme that illustrates the concept of A/B testing. It consists of three elements:

- Users;

- Original and experimental pages;

- Analysis.

For example, you have a landing page that receives traffic (your audience) from contextual advertising. Your designer or manager has watched a webinar about current trends and now suggests replacing the rectangular buttons on the page with round ones. Will this improve the user experience and help you sell more?

To find out, you need to check whether replacing rectangular buttons with round ones increases conversion. That means implementing the change and letting potential customers try the modified landing page. You can then obtain objective data on how conversion changed.

You can run the test on the entire audience or on part of it. The second option is preferable because a negative result then has minimal effect on order volume. Experienced analysts recommend running tests on 5-10% of the audience.
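Testing tools usually handle the split for you. If you divide traffic yourself, one common approach is deterministic hashing: each user ID is hashed together with the experiment name, so a visitor always sees the same version on repeat visits. The sketch below uses a hypothetical `assign_variant` function and a hypothetical experiment name, `round-buttons`. It sends about 10% of users to the new page (B) while everyone else keeps the original (A).

```python
import hashlib


def assign_variant(user_id: str, experiment: str, test_share: float = 0.10) -> str:
    """Deterministically put roughly `test_share` of users into variant "B".

    Hashing the experiment name together with the user ID means the same
    user always gets the same version, while different experiments split
    users independently of each other.
    """
    if not 0.0 <= test_share <= 1.0:
        raise ValueError("test_share must be between 0 and 1")
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 6 bytes (48 bits) of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    return "B" if bucket < test_share else "A"


users = [f"user-{i}" for i in range(100_000)]
in_test = sum(assign_variant(u, "round-buttons") == "B" for u in users)
print(f"{in_test / len(users):.2%} of users see the round-button page")
```

Setting `test_share` to 0.05 gives the lower end of the 5-10% range.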

Once you have created and launched the new page variant, it is time to analyze user behavior and make a decision based on the data. For example, suppose the original page converted at 3-5%, while the version with round buttons converted at 8-10%. All traffic from contextual advertising is then moved to the new version, and the business enjoys the increase in profits.

In short, an A/B test compares the original version (of a site, application, etc.) with a new one on a group of users. It assesses the change in key metrics and supports the final decision on whether to implement the change.

Why You Should A/B Test

E-commerce struggles with abandoned carts, B2B suffers from low-quality leads, and media and publishers see low engagement. Conversion rates are hurt by common problems such as conversion leaks and customer loss at checkout. Let’s look at how A/B testing can help eliminate these problems.

The main advantage of split tests is their low cost. Depending on the changes being tested, you can get significant results without spending a fortune.

If you want to increase sales volume or registrations, or keep customers on the store page longer, you need to run split tests.

When engagement is low, A/B testing helps you improve conversion rates, assess the usability of the site’s features, and more. It lets you analyze every stage of the funnel and choose the best solutions to improve it.

Advantages of regular A/B testing

Saving time and resources

Redesigning a landing page or newsletter you are unhappy with means involving the entire team and spending a lot of time and resources. It is much easier and faster to test a hypothesis with an A/B test, see the result in a couple of weeks, and make one change instead of completely reworking the product.

Stable ROI

A/B testing improves the performance of the selected channels without major additional costs, and its results serve as a guideline for further adjustments.

Confidence in the effectiveness of change

A/B test results are specific, measurable data that can guide your future decisions. Numbers are a much stronger argument than intuition.

Fewer risks

If you change your mailing list, campaigns, or site abruptly, you risk breaking what worked and scaring off customers. Testing lets you make targeted fixes to exactly the things that don’t work. Quick changes without drastic rearrangements mean less risk.

Fewer bounces

People leave a site or ignore mailings and ads for various reasons. A/B tests help you identify and remove the things that drive people away. The deeper and more effective your customer experience analysis, the fewer reasons customers have to turn you down.

Insights

It is one thing to build hypotheses in a meeting room and another to see how users actually behave. A/B tests help you understand your audience and find out what they really like. Based on this information, you can not only adjust a single element but also build full-fledged campaigns.

What Can You A/B Test?

You can apply such a test to anything that can affect user behavior. For example, Netflix uses split testing to refine the personal recommendation page shown to each user, so that it presents the best possible titles to watch.

A/B testing is a useful tool for many professionals. It is especially effective for:

Product managers

They test pricing changes to increase company revenue and optimize the sales funnel.

Marketers

They use split tests to check the audience’s reaction to the marketing elements introduced in the campaign.

Designers

Tests show how users respond to changes in graphics and colors, as well as to changes that affect usability.

Anyone who wants to make their product more convenient and pleasant for the target audience needs this tool. A/B testing provides objective information about ways to improve the current version of the product. But it is not always a story of successful tests and constant growth. First and foremost, you need to make sure you do not make the project worse, and this is where A/B tests are at their best.

Objective data lets you move faster in the market. After all, users know best what they need here and now. In product development, relying only on subjective opinions and your own views will severely hold the product back. That is why projects such as websites and applications need A/B testing like air.

A/B Testing Process

A/B testing follows a straightforward process that helps you get reliable results.

Formulate a hypothesis

Every split test needs a concise rationale, for example: “Conversions are currently unsatisfactory because this page element is poorly placed; if we move it, conversions may improve.”

Determine the boundaries of change

It is better to test one element at a time. Each modification has its own effect, expressed both qualitatively (better or worse) and quantitatively (10 more conversions, 100 more, and so on). If you modify two or more elements at once, the results show only the combined effect of the changes; the effect of each individual change cannot be traced. Testing several changes together makes sense only when building a site from scratch. In that case nothing is clear yet, and you need to choose an overall concept to refine further rather than the design of a single element.

Determine the duration of the A/B test

The duration depends on the site’s traffic, but in any case it is better to run the test for at least two weeks.

Ensure traffic identity

Try to send a fully homogeneous audience to both versions of the page. If you run the split test with Google Experiments together with Google Ads, the system divides the traffic itself, so these conditions are met automatically. If you use several traffic sources, set up separate link addresses for each source or use dedicated testing services.
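One way to confirm that traffic really was divided as intended is a sample ratio mismatch check. This is a chi-square test comparing the observed visitor counts with the planned split. The article itself does not prescribe this check, so treat it as an optional sanity test. The sketch assumes a planned 50/50 split and uses hypothetical visitor counts.

```python
import math


def sample_ratio_p_value(visitors_a: int, visitors_b: int,
                         expected_share_b: float = 0.5) -> float:
    """Chi-square goodness-of-fit test (1 degree of freedom): does the
    observed split match the intended one? A tiny p-value means traffic
    is not being divided as planned."""
    total = visitors_a + visitors_b
    expected_b = total * expected_share_b
    expected_a = total - expected_b
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


print(sample_ratio_p_value(5_000, 5_030))  # close to the planned 50/50 split
print(sample_ratio_p_value(5_000, 5_600))  # suspicious imbalance
```

A very small p-value (for example, below 0.001) suggests a problem with how traffic is routed. In that case, do not trust the test results until the problem is fixed.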

Monitor the process and analyze the results

Something can go wrong while a test is running, so always stay aware of what is happening. At the end of the test, collect all results and check their statistical significance.
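For conversion rates, a common way to check statistical significance is the two-proportion z-test. The sketch below uses hypothetical results: 200 conversions from 10,000 visitors on the control page and 260 from 10,000 on the variant. It applies the 95% confidence threshold discussed later in this article. The function name is illustrative.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Pooled two-proportion z-test. Returns (z, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no variation at all (0% or 100% conversion everywhere)
        return 0.0, 1.0
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
significant = p < 0.05  # the 95% confidence threshold
print(f"z = {z:.2f}, p = {p:.4f}, significant at 95%: {significant}")
```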

Test setup tools

Some website builders have built-in split testing. You can also run an experiment manually by dividing the user flow yourself and collecting and analyzing the statistics. However, several services are designed specifically for this kind of test.

A/B Testing Calculator

The calculator helps you compare A/B test results across multiple ad campaigns. For each option, it reports one of three simple indicators:

Indicator “Yes”

This option performed better than option A; its settings proved more effective in the segment tested.

Indicator “No”

The result of this option is worse than that of option A.

Indicator “?”

The system could not determine which option is better because no significant difference was found.
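We do not know exactly how any particular calculator computes its verdict. The sketch below reproduces the same Yes / No / ? logic under one assumption: a 95% confidence interval for the difference between the two conversion rates. If the whole interval lies above zero, the option beats A. If it lies entirely below zero, the option loses. Otherwise the result is inconclusive. The function name and the counts in the example are hypothetical.

```python
import math
from statistics import NormalDist


def compare_to_control(conv_a: int, n_a: int, conv_b: int, n_b: int,
                       confidence: float = 0.95) -> str:
    """Return "Yes", "No" or "?" for option B versus option A, using a
    Wald confidence interval for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_b - p_a
    low, high = diff - z * se, diff + z * se
    if low > 0:
        return "Yes"  # B is better than A at the chosen confidence level
    if high < 0:
        return "No"   # B is worse than A
    return "?"        # interval includes zero: no significant difference


print(compare_to_control(200, 10_000, 260, 10_000))
print(compare_to_control(260, 10_000, 200, 10_000))
print(compare_to_control(200, 10_000, 200, 10_000))
```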

How Long Should Your A/B Tests Run?

Even under the best circumstances, tests need at least two weeks to produce accurate data. Why? Conversions and web traffic vary greatly depending on a few key variables, such as the day of the week. Marketers often mistakenly end their tests too early, believing they already have the answer. If you jump to conclusions about which variation is the winner, you get skewed results and the test is ineffective.

If you already knew the answer, you would not need a test. An honest test means letting the process unfold. Stick to the 95% rule: treat a result as reliable only if it reaches a confidence level of 95% or higher. Do not stop the test early just because the numbers look good partway through; let it run for the planned duration. Find a tool for calculating statistical significance and be patient.
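A simulation shows why ending a test early is risky. The sketch below runs hypothetical A/A tests, where both versions have exactly the same 5% conversion rate, so any “winner” is a false positive. It compares two approaches: checking significance once at the end of 14 days, and checking every day and declaring a winner at the first significant result. All parameters (runs, daily visitors, seed) are illustrative assumptions.

```python
import random
from statistics import NormalDist


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_peeking(runs: int = 200, days: int = 14, daily_per_arm: int = 200,
                     rate: float = 0.05, alpha: float = 0.05,
                     seed: int = 1) -> tuple[float, float]:
    """Return (false positive rate with daily peeking, rate with one final check)."""
    rng = random.Random(seed)
    peeking_hits = 0
    final_hits = 0
    for _ in range(runs):
        conv_a = conv_b = visitors = 0
        significant_at_any_check = False
        for _ in range(days):
            # Both arms share the same true conversion rate (an A/A test).
            conv_a += sum(rng.random() < rate for _ in range(daily_per_arm))
            conv_b += sum(rng.random() < rate for _ in range(daily_per_arm))
            visitors += daily_per_arm
            if p_value(conv_a, visitors, conv_b, visitors) < alpha:
                significant_at_any_check = True  # a peeker would stop here
        peeking_hits += significant_at_any_check
        final_hits += p_value(conv_a, visitors, conv_b, visitors) < alpha
    return peeking_hits / runs, final_hits / runs


peeking_rate, final_rate = simulate_peeking()
print(f"False winners with daily peeking:   {peeking_rate:.1%}")
print(f"False winners with one final check: {final_rate:.1%}")
```

With these settings, the daily-peeking rate should come out well above the roughly 5% rate of the single final check. This is why a test should run for its planned duration rather than stop at the first significant-looking result.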

How Do You Analyze Your A/B Testing Metrics and Take Action?

When you are sure that your test has collected enough data, reached the required level of statistical significance, and run for long enough, it is time to start the analysis. The variant(s) you tested will either win or lose. Either outcome is a learning opportunity that helps you better understand your audience.

A/B Testing & SEO

Before you start split testing, it is important to remember that SEO testing is different from conversion rate optimization (CRO) and user experience testing. In user experience testing, you modify page elements to see how visitor behavior changes. In SEO split testing, you modify a page’s content to see how search engine crawlers respond to the changes, and you look for the best-performing version. The goal of SEO split testing is to drive more organic traffic to the page, not to increase your website’s conversion rate.

What are the Mistakes to Avoid While A/B Testing?

Like any tool, A/B testing requires a careful approach. Without it, mistakes are inevitable and can invalidate an experiment. Where do marketers stumble when running A/B tests?

Running a test without a clear hypothesis

Formulate your hypotheses carefully before you start the test. If you experiment without a well-defined hypothesis, you risk collecting the wrong data and fixing things that did not need fixing. Analyze the situation, understand what is not working, and propose solutions. Only after this preliminary work does an A/B test make sense.

Testing too many options

If you include too many variables in a test, you risk not knowing what exactly affected the conversion, since each of them influences it to some degree. Stay focused and prioritize to get more accurate data; for example, test two email or creative options at a time. Remember that the more variables there are, the lower the accuracy of the test.
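The claim that more variables mean lower accuracy can be quantified. Suppose you compare several variants against the control at the 95% level. The probability that at least one of them looks like a winner purely by chance grows with the number of comparisons. This sketch assumes the comparisons are independent. It also shows the Bonferroni correction, a simple and conservative fix that divides the significance level by the number of comparisons. Bonferroni is one option among several; this article does not prescribe it.

```python
def family_wise_error_rate(alpha: float, comparisons: int) -> float:
    """Chance of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** comparisons


def bonferroni_alpha(alpha: float, comparisons: int) -> float:
    """Per-comparison significance threshold that keeps the overall
    false positive rate at or below `alpha`."""
    return alpha / comparisons


for k in (1, 2, 5, 10):
    print(f"{k:>2} comparisons: chance of a false winner = "
          f"{family_wise_error_rate(0.05, k):.1%}, "
          f"Bonferroni threshold = {bonferroni_alpha(0.05, k):.4f}")
```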

Wrong test duration

Whether a test runs too long or too short, the experiment fails either way. Choose a time frame that gives reliable conversion data for every variant. For example, Facebook offers a test window of 3 to 14 days. Three days is usually not enough; plan for at least a full week, and preferably two.

Wrong assessment of external traffic factors

If you test one option during a period of peak traffic and the other during a quiet period, the results cannot be used; both variants should run at the same time. Vacation seasons, holidays, sales, and any other traffic spikes are not the best time for an A/B test.

Ignoring statistics in favor of your own feelings

Set your own predictions aside and trust the numbers in your reports. Even disappointing results tell you to change course, not to abandon the tool. Base each test on the data from the previous one and keep improving the results until the statistics satisfy you.

A/B Testing Examples

Now it’s time for the proof. Let’s look at three examples of successful A/B testing so you can see this process in action.

WallMonkeys

If you’re not familiar with WallMonkeys, it is a company that sells a huge variety of wall stickers for homes and businesses.

Goal

WallMonkeys wanted to optimize its homepage for clicks and conversions. The starting point was the original homepage, which featured a standard-style image with a headline overlay.

There was nothing wrong with the original home page. The image was attractive and not too distracting. The headline and CTA seemed to align well with the company’s goals.

First, WallMonkeys used Crazy Egg heatmaps to see how users navigated the homepage. Heatmaps and scrollmaps show you where to focus your attention: if you see a lot of clicks or scrolling in an area, you know people are drawn to that part of your site.

Result

After generating user behavior reports, WallMonkeys decided to run an A/B test. The company replaced the stock image with a more striking picture that showed visitors what they could do with WallMonkeys products.

WallMonkeys kept testing. In the next test, the company replaced its slider with a prominent search bar. The idea was that customers would be more inclined to search for products they were specifically interested in.

Electronic Arts

When Electronic Arts, a successful video game company, released a new version of one of its most popular games, it wanted the launch to be better than ever. SimCity 5, a simulation game that lets players build and manage their own cities, was expected to sell well, and Electronic Arts wanted to capitalize on its popularity.

According to HubSpot, Electronic Arts relied on A/B testing to properly design their sales page for SimCity 5.

Goal

This A/B testing example focused on improving sales. Electronic Arts wanted to maximize revenue from the game immediately after its release, as well as through pre-sales.

These days, people can buy and download games instantly. The digital revolution has made plastic-cased discs virtually obsolete, especially as companies like Electronic Arts promote digital downloads, which are cheaper for the company and more convenient for the consumer.

However, sometimes the smallest things can affect conversions. Electronic Arts wanted to test different versions of its page to find out how to increase sales significantly.

Result

The control version of the pre-order page offered a 20 percent discount on a future purchase to anyone who bought SimCity 5. The variation removed this pre-order incentive.

As it turned out, the variation performed more than 40% better than the control version. SimCity 5 fans were not interested in the incentive; they just wanted to buy the game. As a result of the A/B test, half of the game’s sales were digital.

Humana

Humana, an insurance company, ran a fairly simple A/B test with huge results. The company had a banner with a simple headline, a CTA, and an image. After A/B testing, it realized it needed to change a couple of things to make the banner more effective. Like WallMonkeys, this example shows that one test is often not enough.

Goal

According to Design For Founders, Humana wanted to increase the click-through rate (CTR) on this banner. It looked good, but the company suspected that simple changes could improve CTR.

The initial banner had a lot of text: a headline containing a number (which often leads to better conversions) and a couple of lines in a bulleted list. This is a fairly standard layout, but it did not give Humana the results it wanted.

The second option significantly reduced the copy. A couple of other changes, including the image and color scheme, rounded out the differences between the control and the eventual winner. A later test changed the CTA from “In-Store Purchase Plans” to “Start Now”.

Result

Simply cleaning up the copy and changing the image resulted in a 433 percent increase in CTR. After the CTA text was changed, the company experienced a further 192 percent increase.

In Conclusion

A/B testing is a simple and intuitive tool that helps you improve websites and ads without much effort or expense. The key is to follow a simple experimental process:

- Set a goal;

- Come up with hypotheses;

- Identify options for adjustments to achieve the goal;

- Test your variables;

- Analyze the results, for example with one of the tools and metrics described above;

- Roll out the most effective option.

Remember: you benefit from any research only if you are guided by analytics and act consistently. A/B testing is no exception.